We introduce weightwatcher (ww), a Python tool for computing quality metrics of trained, and … More

Category: Uncategorized

Towards a new Theory of Learning: Statistical Mechanics of Deep Neural Networks

Introduction: For the past few years, we have talked a lot about how we can understand the properties of Deep … More

This Week in Machine Learning and AI: Implicit Self-Regularization

Big thanks to and the team at This Week in Machine Learning and AI for my recent interview: Implicit Self-Regularization … More

SF Bay ACM Talk: Heavy Tailed Self Regularization in Deep Neural Networks

My collaborator did a great job giving a talk on our research at the local San Francisco Bay ACM Meetup … More

Heavy Tailed Self Regularization in Deep Neural Nets: 1 year of research

My talk at ICSI, the International Computer Science Institute at UC Berkeley. ICSI is a leading independent, nonprofit center for research … More

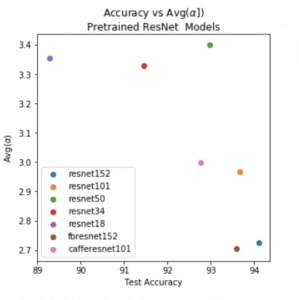

Don’t Peek part 2: Predictions without Test Data

This is a follow-up to a previous post, "Don’t Peek: Deep Learning without looking … at test data". The idea…suppose … More

Machine Learning and AI for the Lean Start Up

My recent talk at the French Tech Hub Startup Accelerator

Don’t Peek: Deep Learning without looking … at test data

What is the purpose of a theory? To explain why something works. Sure. But what good is a theory … More

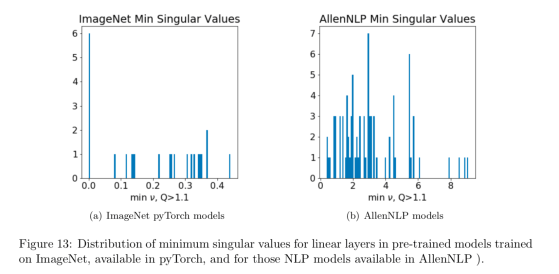

Rank Collapse in Deep Learning

We can learn a lot about Why Deep Learning Works by studying the properties of the layer weight matrices of … More

Power Laws in Deep Learning 2: Universality

Power Law Distributions in Deep Learning In a previous post, we saw that the Fully Connected (FC) layers of the … More